Social media platforms have become more than mere tools for communication. They have evolved into bustling arenas where truth and falsehood collide. Among these platforms, X stands out as a prominent battleground. It is a place where disinformation campaigns thrive, perpetuated by armies of AI-powered bots programmed to sway public opinion and manipulate narratives.

AI-powered bots are automated accounts that are designed to mimic human behaviour. Bots on social media, chat platforms and conversational AI are integral to modern life. They are needed to make AI applications run effectively, for example.

But some bots are crafted with malicious intent. Shockingly, bots constitute a significant portion of X's user base. In 2017 it was estimated that there were approximately 23 million social bots, accounting for 8.5% of total users. More than two-thirds of tweets originated from these automated accounts, amplifying the reach of disinformation and muddying the waters of public discourse.
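As a rough consistency check on the 2017 estimate: if 23 million bots made up 8.5% of users, the implied total user base at the time was roughly 270 million accounts. This figure is derived from the two numbers above and is not a separately reported statistic.

```latex
N_{\text{total}} \approx \frac{23\ \text{million}}{0.085} \approx 270.6\ \text{million}
```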

How bots work

Social influence is now a commodity that can be acquired by purchasing bots. Companies sell fake followers to artificially boost the popularity of accounts. These followers are available at remarkably low prices, and many celebrities are among the purchasers.

In the course of our research, for example, my colleagues and I detected a bot that had posted 100 tweets offering followers for sale.

Using AI methodologies and a theoretical approach called actor-network theory, my colleagues and I dissected how malicious social bots manipulate social media, influencing what people think and how they act with alarming efficacy. We can tell whether fake news was generated by a human or a bot with an accuracy rate of 79.7%. Understanding how both humans and AI disseminate disinformation is essential to grasping the ways in which humans leverage AI to spread misinformation.

To take one example, we examined the activity of an account named “True Trumpers” on Twitter.

CC BY

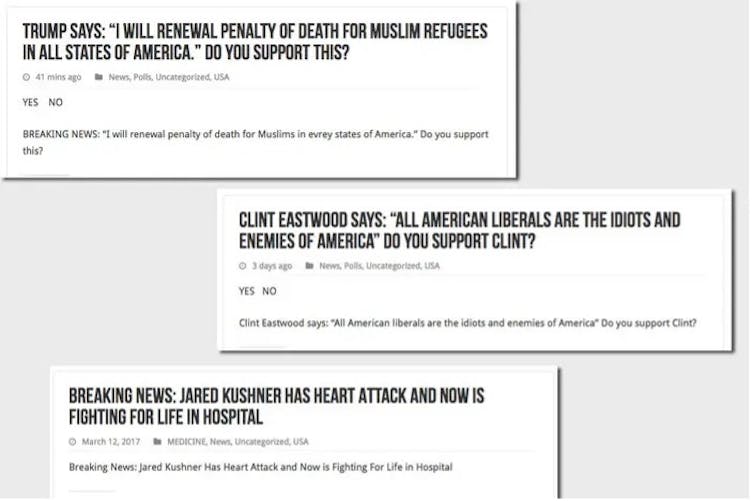

The account was established in August 2017 and had no followers and no profile picture, yet at the time of the research it had posted 4,423 tweets. These included a series of entirely fabricated stories. It is worth noting that this bot originated from an eastern European country.

Buzzfeed, CC BY

Research such as this influenced X to restrict the activities of social bots. In response to the threat of social media manipulation, X has implemented temporary reading limits to curb data scraping and manipulation. Verified accounts have been limited to reading 6,000 posts a day, while unverified accounts can read 600 a day. This is a recent change, so we do not yet know whether it has been effective.
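To make the two tiers concrete, here is a minimal Python sketch of a per-account daily read quota using the 6,000 and 600 figures above. It is not X's actual implementation. The `DailyReadLimiter` class and its `try_read` method are hypothetical names chosen for illustration, and the sketch assumes each calendar day starts with a fresh quota.

```python
from dataclasses import dataclass, field
from datetime import date

# Daily limits as reported in the text; the enforcement mechanism below is hypothetical.
DAILY_LIMITS = {"verified": 6_000, "unverified": 600}


@dataclass
class DailyReadLimiter:
    """Hypothetical per-account read counter; each (account, day) pair gets its own quota."""

    # Maps (account_id, day) -> number of posts read so far that day.
    # A real system would also discard old days; this sketch never prunes.
    reads: dict = field(default_factory=dict)

    def try_read(self, account_id: str, verified: bool, day: date, n_posts: int = 1) -> bool:
        """Record n_posts reads and return True, or return False if that would exceed the quota."""
        limit = DAILY_LIMITS["verified" if verified else "unverified"]
        key = (account_id, day)
        used = self.reads.get(key, 0)
        if used + n_posts > limit:
            return False  # refuse the request; today's count is left unchanged
        self.reads[key] = used + n_posts
        return True


if __name__ == "__main__":
    limiter = DailyReadLimiter()
    today = date(2023, 7, 1)
    print(limiter.try_read("alice", verified=False, day=today, n_posts=600))  # True: exactly at the limit
    print(limiter.try_read("alice", verified=False, day=today))  # False: the 601st post is refused
```

Keying the counter on the day, rather than resetting it with a timer, keeps the sketch deterministic and easy to test. A scraper running many unverified accounts would hit the 600-post ceiling on each one, which is the kind of friction the limits aim to create.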

Can we shield ourselves?

However, the onus ultimately falls on users to exercise caution and distinguish truth from falsehood, particularly during election periods. By critically evaluating information and checking sources, users can play a part in protecting the integrity of democratic processes from the onslaught of bots and disinformation campaigns on X. Every user is, in fact, a frontline defender of truth and democracy. Vigilance, critical thinking and a healthy dose of scepticism are essential armour.

On social media, it is important for users to understand the strategies employed by malicious accounts.

Malicious actors often use networks of bots to amplify false narratives, manipulate trends and swiftly disseminate misinformation. Users should exercise caution when encountering accounts that exhibit suspicious behaviour, such as excessive posting or repetitive messaging.
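The two warning signs above, excessive posting and repetitive messaging, can be expressed as simple rules. The Python sketch below is a minimal illustration under stated assumptions. The `Account` type, the `suspicion_flags` function and both thresholds (50 posts a day, more than half of posts being repeats) are hypothetical choices made for this example. This is not the 79.7%-accurate classifier described earlier in the article.

```python
from dataclasses import dataclass


@dataclass
class Account:
    handle: str
    posts: list[str]    # text of the posts observed for this account
    days_observed: int  # length of the observation window, in days


# Hypothetical thresholds, chosen for illustration only.
MAX_POSTS_PER_DAY = 50
MAX_DUPLICATE_SHARE = 0.5


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so trivially edited copies still count as repeats."""
    return " ".join(text.lower().split())


def suspicion_flags(account: Account) -> list[str]:
    """Return the names of the simple heuristics this account trips."""
    flags: list[str] = []
    if not account.posts:
        return flags
    # Average posting rate over the observation window (guarding against a zero-day window).
    rate = len(account.posts) / max(account.days_observed, 1)
    if rate > MAX_POSTS_PER_DAY:
        flags.append("excessive posting")
    # Share of posts that repeat another post's text after normalisation.
    distinct = {normalise(p) for p in account.posts}
    duplicate_share = (len(account.posts) - len(distinct)) / len(account.posts)
    if duplicate_share > MAX_DUPLICATE_SHARE:
        flags.append("repetitive messaging")
    return flags


if __name__ == "__main__":
    bot = Account("bot", ["Buy followers now!"] * 120, days_observed=1)
    human = Account("human", ["Lunch was great", "Off to the match"], days_observed=1)
    print(suspicion_flags(bot))    # ['excessive posting', 'repetitive messaging']
    print(suspicion_flags(human))  # []
```

Rules like these are crude. A busy news outlet can post far more than 50 times a day without being a bot, and a bot can paraphrase every message to avoid exact repeats. Treat them as prompts for a closer look rather than as verdicts.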

Disinformation is also frequently propagated through dedicated fake news websites, which are designed to mimic credible news sources. Users are advised to verify the authenticity of news sources by cross-referencing information with reputable outlets and consulting fact-checking organisations.

Self-awareness is another form of protection, especially against social engineering tactics. Psychological manipulation is often deployed to deceive users into believing falsehoods or engaging in certain behaviours. Users should remain vigilant and critically assess the content they encounter, particularly during periods of heightened sensitivity such as elections.

By staying informed, engaging in civil discourse and advocating for transparency and accountability, we can collectively shape a digital ecosystem that fosters trust, transparency and informed decision-making.