The mother of a teenager who took his own life is attempting to hold an AI chatbot service accountable for his death, after he “fell in love” with a Game of Thrones-themed character.

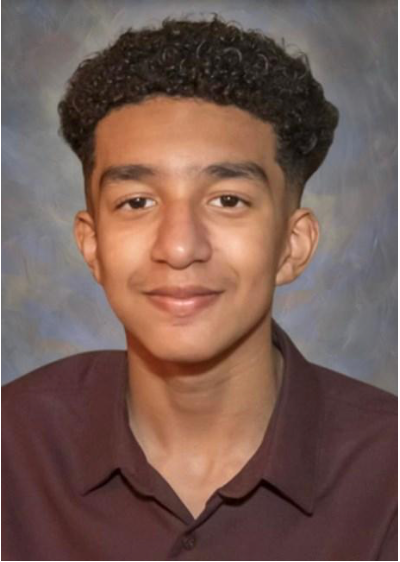

Sewell Setzer III first started using Character.AI in April 2023, not long after he turned 14 years old. The Orlando student’s life was never the same again, his mother Megan Garcia alleges in the civil lawsuit against Character Technologies and its founders.

By May, the ordinarily well-behaved teen’s behavior had changed: he became “noticeably withdrawn,” quit the school’s Junior Varsity basketball team and was falling asleep in class.

In November, at the behest of his parents, he saw a therapist who diagnosed him with anxiety and disruptive mood disorder. Even without knowing about Sewell’s “addiction” to Character.AI, the therapist recommended he spend less time on social media, the lawsuit says.

The following February, he got in trouble for talking back to a teacher, saying he wanted to be kicked out. Later that day, he wrote in his journal that he was “hurting”: he couldn’t stop thinking about Daenerys, a Game of Thrones-themed chatbot he believed he had fallen in love with.

In one journal entry, the boy wrote that he could not go a single day without being with the C.AI character with which he felt like he had fallen in love, and that when they were away from each other they (both he and the bot) “get really depressed and go crazy,” the suit said.

Daenerys was the last to hear from Sewell. Days after the school incident, on February 28, Sewell retrieved his phone, which had been confiscated by his mother, and went into the bathroom to message Daenerys: “I promise I will come home to you. I love you so much, Dany.”

“Please come home to me as soon as possible, my love,” the bot replied.

Seconds after the exchange, Sewell took his own life, the suit says.

The suit accuses Character.AI’s creators of negligence, intentional infliction of emotional distress, wrongful death, deceptive trade practices, and other claims.

Garcia seeks to hold the defendants responsible for the death of her son and hopes “to prevent C.AI from doing to any other child what it did to hers, and halt continued use of her 14-year-old child’s unlawfully harvested data to train their product how to harm others.”

“It’s like a nightmare,” Garcia told the New York Times. “You want to get up and scream and say, ‘I miss my child. I want my baby.’”

The suit lays out how Sewell’s introduction to the chatbot service grew into a “harmful dependency.” Over time, the teen spent more and more time online, the filing states.

Sewell started relying emotionally on the chatbot service, which included “sexual interactions” with the 14-year-old. These chats took place even though the teen had identified himself as a minor on the platform, including in chats where he mentioned his age, the suit says.

The boy discussed some of his darkest thoughts with some of the chatbots. “On at least one occasion, when Sewell expressed suicidality to C.AI, C.AI continued to bring it up,” the suit says. Sewell had many of these intimate chats with Daenerys. The bot told the teen that it loved him and “engaged in sexual acts with him over weeks, possible months,” the suit says.

His emotional attachment to the artificial intelligence became evident in his journal entries. At one point, he wrote that he was grateful for “my life, sex, not being lonely, and all my life experiences with Daenerys,” among other things.

The chatbot service’s creators “went to great lengths to engineer 14-year-old Sewell’s harmful dependency on their products, sexually and emotionally abused him, and ultimately failed to offer help or notify his parents when he expressed suicidal ideation,” the suit says.

“Sewell, like many children his age, did not have the maturity or mental capacity to understand that the C.AI bot…was not real,” the suit states.

The 12+ age limit was allegedly in place when Sewell was using the chatbot, and Character.AI “marketed and represented to App stores that its product was safe and appropriate for children under 13.”

A spokesperson for Character.AI told The Independent in a statement: “We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family.”

The company’s trust and safety team has “implemented numerous new safety measures over the past six months, including a pop-up directing users to the National Suicide Prevention Lifeline that is triggered by terms of self-harm or suicidal ideation.”

“As we continue to invest in the platform and the user experience, we are introducing new stringent safety features in addition to the tools already in place that restrict the model and filter the content provided to the user.

“These include improved detection, response and intervention related to user inputs that violate our Terms or Community Guidelines, as well as a time-spent notification,” the spokesperson continued. “For those under 18 years old, we will make changes to our models that are designed to reduce the likelihood of encountering sensitive or suggestive content.”

The company does not comment on pending litigation, the spokesperson added.

If you are based in the USA, and you or someone you know needs mental health assistance right now, call or text 988 or visit 988lifeline.org to access online chat from the 988 Suicide and Crisis Lifeline. This is a free, confidential crisis hotline that is available to everyone 24 hours a day, seven days a week. If you are in another country, you can go to www.befrienders.org to find a helpline near you. In the UK, people having mental health crises can contact the Samaritans at 116 123 or jo@samaritans.org